Neural Circuits Trained with Standard Reinforcement Learning Can Accumulate Probabilistic Information during Decision Making.

When making decisions, we often need to take several pieces of information into account. This paper describes how a network of nerve cells deep in the brain, called the basal ganglia, can learn to weigh different pieces of information efficiently during decision making and thereby help select the best action.

Scientific Abstract

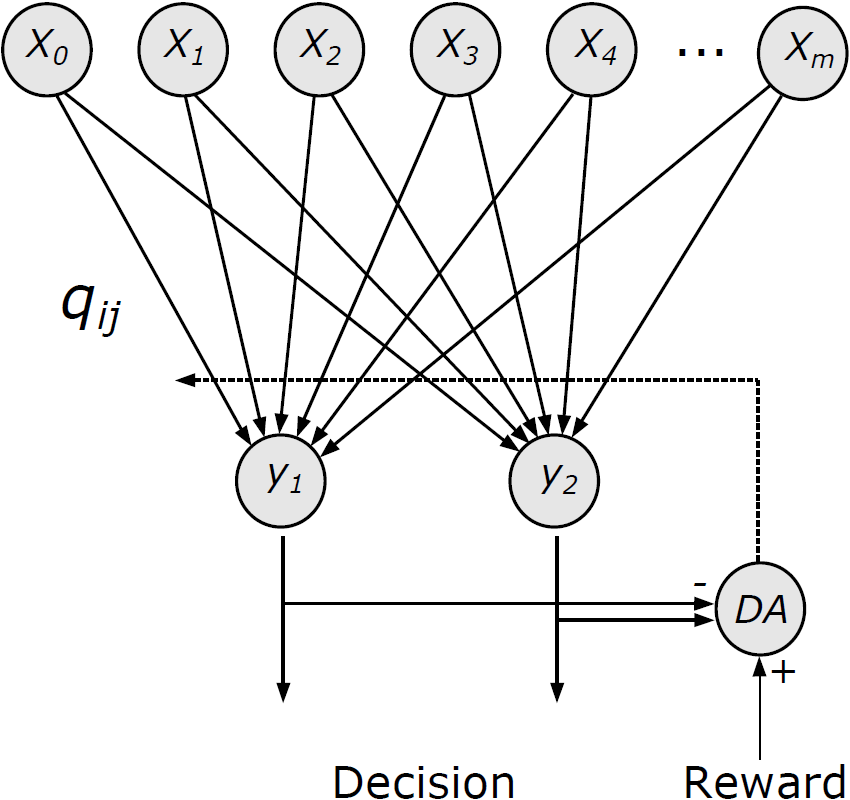

Much experimental evidence suggests that during decision making, neural circuits accumulate evidence supporting alternative options. A computational model that describes this accumulation well for choices between two options assumes that the brain integrates the log ratios of the likelihoods of the sensory inputs given the two options. Several models have been proposed for how neural circuits can learn these log-likelihood ratios from experience, but all of them introduced novel, specially dedicated synaptic plasticity rules. Here we show that for a certain broad class of tasks, the log-likelihood ratios are approximately proportional to the expected rewards for selecting actions. Therefore, a simple model based on standard reinforcement learning rules is able to estimate the log-likelihood ratios from experience and, on each trial, to accumulate the log-likelihood ratios associated with the presented stimuli while selecting an action. Simulations of the model replicate experimental data on both behavior and neural activity in tasks requiring accumulation of probabilistic cues. Our results suggest that the brain need not support dedicated plasticity rules, as the standard mechanisms proposed to describe reinforcement learning can enable neural circuits to perform efficient probabilistic inference.
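The link between expected rewards and log-likelihood ratios can be illustrated with a small simulation. The sketch below is not the authors' basal ganglia model; it assumes a hypothetical task with four cues, equal prior probabilities for the two states, a reward of +1 for a correct choice and -1 for an error, random action choice during learning on single-cue trials, and a plain delta rule with step size 1/n. Under these assumptions the expected reward for choosing A given a cue is 2P(A | cue) - 1, so Q(A) - Q(B) = 4P(A | cue) - 2, which is the first-order Taylor approximation of the log-likelihood ratio log[P(cue | A) / P(cue | B)] = log[P(A | cue) / P(B | cue)] around P(A | cue) = 1/2. The cue probabilities and all function names (`learn_values`, `choose`, `accuracy`) are illustrative only.

```python
import math
import random

# Hypothetical task (illustrative numbers, not from the paper): the hidden
# state is A or B with equal prior probability, and every cue shown on a
# trial is drawn independently from P(cue | state).
P_CUE_GIVEN_A = [0.30, 0.27, 0.23, 0.20]
P_CUE_GIVEN_B = [0.20, 0.23, 0.27, 0.30]
ACTIONS = ("A", "B")


def true_llr(cue):
    """Log-likelihood ratio log[P(cue | A) / P(cue | B)]."""
    return math.log(P_CUE_GIVEN_A[cue] / P_CUE_GIVEN_B[cue])


def sample_cue(state, rng):
    """Draw one cue index from the state-conditional distribution."""
    probs = P_CUE_GIVEN_A if state == "A" else P_CUE_GIVEN_B
    return rng.choices(range(len(probs)), weights=probs)[0]


def learn_values(n_trials, rng):
    """Learn Q[cue][action] from reward feedback with a delta rule.

    Single-cue trials; actions are chosen at random (pure exploration, a
    simplifying assumption); reward is +1 if correct and -1 otherwise.
    """
    n_cues = len(P_CUE_GIVEN_A)
    q = [{a: 0.0 for a in ACTIONS} for _ in range(n_cues)]
    counts = [{a: 0 for a in ACTIONS} for _ in range(n_cues)]
    for _ in range(n_trials):
        state = rng.choice(ACTIONS)
        cue = sample_cue(state, rng)
        action = rng.choice(ACTIONS)
        reward = 1.0 if action == state else -1.0
        counts[cue][action] += 1
        # Q <- Q + alpha * (reward - Q); alpha = 1/n makes Q the running mean
        # of the rewards obtained for this cue-action pair.
        alpha = 1.0 / counts[cue][action]
        q[cue][action] += alpha * (reward - q[cue][action])
    return q


def choose(cues, q):
    """Sum the learned value differences over all cues, then pick an action."""
    decision_variable = sum(q[c]["A"] - q[c]["B"] for c in cues)
    return "A" if decision_variable > 0 else "B"


def accuracy(q, cues_per_trial, n_trials, rng):
    """Fraction of test trials on which the accumulated choice is correct."""
    correct = 0
    for _ in range(n_trials):
        state = rng.choice(ACTIONS)
        cues = [sample_cue(state, rng) for _ in range(cues_per_trial)]
        correct += choose(cues, q) == state
    return correct / n_trials


rng = random.Random(0)
q = learn_values(200_000, rng)
for cue in range(len(P_CUE_GIVEN_A)):
    diff = q[cue]["A"] - q[cue]["B"]
    print(f"cue {cue}: Q(A) - Q(B) = {diff:+.3f}, LLR = {true_llr(cue):+.3f}")
print("accuracy, 1 cue:  ", accuracy(q, 1, 5_000, rng))
print("accuracy, 10 cues:", accuracy(q, 10, 5_000, rng))
```

Because the cues in this sketch are only weakly informative, the learned value differences stay close to the log-likelihood ratios, so summing them over the cues of a trial approximates summing the log-likelihood ratios, and accuracy rises as more cues are accumulated. For strongly predictive cues (P(A | cue) near 0 or 1) the linear approximation breaks down, since the expected reward saturates at ±1 while the log-likelihood ratio grows without bound.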

Similar content

Preprint

Dithering suppresses half-harmonic neural synchronisation to photic stimulation in humans

Preprint

Striatal dopamine reflects individual long-term learning trajectories

Paper

Benchmarking Predictive Coding Networks - Made Simple

2025. International Conference on Learning Representations

Citation

2017. Neural Comput, 29(2):368-393.

Free Full Text at Europe PMC

PMC5462093